Eliezer Yudkowsky

| Elizer Jadkovski | |

|---|---|

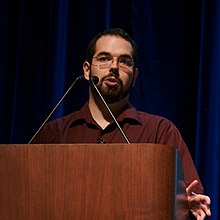

Jadkovski na Univerzitetu Standford 2006. | |

| Puno ime | Eliezer Shlomo[а] Yudkowsky |

| Ime po rođenju | Eliezer Yudkowsky |

| Datum rođenja | (1979-09-11)11. септембар 1979. |

| Državljanstvo | američko |

| Poslodavac | Institut za istraživanje mašinske inteligencije |

| Radovi | Formulisanje termina prijateljska veštačka inteligencija istrađivanje VI bezbednosti Racionalno pisanje Osnivač LessWrong |

| Veb-sajt | www |

Eliezer Yudkowsky[1] (born 11 September 1979) is an American artificial intelligence researcher[2][3][4][5] and a writer on decision theory and ethics, best known for popularizing ideas related to friendly artificial intelligence.[6][7] He is a founder of, and researcher at, the Machine Intelligence Research Institute (MIRI), a private nonprofit research organization based in Berkeley, California.[8] His work on the prospect of a runaway intelligence explosion influenced philosopher Nick Bostrom's 2014 book Superintelligence: Paths, Dangers, Strategies.[9]

Academic publications

- Yudkowsky, Eliezer (2007). "Levels of Organization in General Intelligence" (PDF). Artificial General Intelligence. Berlin: Springer.

- Yudkowsky, Eliezer (2008). "Cognitive Biases Potentially Affecting Judgement of Global Risks" (PDF). In Bostrom, Nick; Ćirković, Milan (eds.). Global Catastrophic Risks. Oxford University Press. ISBN 978-0199606504.

- Yudkowsky, Eliezer (2008). "Artificial Intelligence as a Positive and Negative Factor in Global Risk" (PDF). In Bostrom, Nick; Ćirković, Milan (eds.). Global Catastrophic Risks. Oxford University Press. ISBN 978-0199606504.

- Yudkowsky, Eliezer (2011). "Complex Value Systems in Friendly AI" (PDF). Artificial General Intelligence: 4th International Conference, AGI 2011, Mountain View, CA, USA, August 3–6, 2011. Berlin: Springer.

- Yudkowsky, Eliezer (2012). "Friendly Artificial Intelligence". In Eden, Ammon; Moor, James; Søraker, John; et al. (eds.). Singularity Hypotheses: A Scientific and Philosophical Assessment. The Frontiers Collection. Berlin: Springer. pp. 181–195. ISBN 978-3-642-32559-5. doi:10.1007/978-3-642-32560-1_10.

- Bostrom, Nick; Yudkowsky, Eliezer (2014). "The Ethics of Artificial Intelligence" (PDF). In Frankish, Keith; Ramsey, William (eds.). The Cambridge Handbook of Artificial Intelligence. New York: Cambridge University Press. ISBN 978-0-521-87142-6.

- LaVictoire, Patrick; Fallenstein, Benja; Yudkowsky, Eliezer; Bárász, Mihály; Christiano, Paul; Herreshoff, Marcello (2014). "Program Equilibrium in the Prisoner's Dilemma via Löb's Theorem". Multiagent Interaction without Prior Coordination: Papers from the AAAI-14 Workshop. AAAI Publications. Archived from the original on 15 April 2021. Retrieved 16 October 2015.

- Soares, Nate; Fallenstein, Benja; Yudkowsky, Eliezer (2015). "Corrigibility" (PDF). AAAI Workshops: Workshops at the Twenty-Ninth AAAI Conference on Artificial Intelligence, Austin, TX, January 25–26, 2015. AAAI Publications.

Notes

- ^ Or Solomon

References

- ^ "Eliezer Yudkowsky on 'Three Major Singularity Schools'". YouTube. 16 February 2012. Timestamp 1:18.

- ^ Silver, Nate (10 April 2023). "How Concerned Are Americans About The Pitfalls Of AI?". FiveThirtyEight. Archived from the original on 17 April 2023. Retrieved 17 April 2023.

- ^ Ocampo, Rodolfo (4 April 2023). "I used to work at Google and now I'm an AI researcher. Here's why slowing down AI development is wise". The Conversation. Archived from the original on 11 April 2023. Retrieved 19 June 2023.

- ^ Gault, Matthew (31 March 2023). "AI Theorist Says Nuclear War Preferable to Developing Advanced AI". Vice. Archived from the original on 15 May 2023. Retrieved 19 June 2023.

- ^ Hutson, Matthew (16 May 2023). "Can We Stop Runaway A.I.?". The New Yorker. ISSN 0028-792X. Archived from the original on 19 May 2023. Retrieved 19 May 2023. "Eliezer Yudkowsky, a researcher at the Machine Intelligence Research Institute, in the Bay Area, has likened A.I.-safety recommendations to a fire-alarm system. A classic experiment found that, when smoky mist began filling a room containing multiple people, most didn't report it. They saw others remaining stoic and downplayed the danger. An official alarm may signal that it's legitimate to take action. But, in A.I., there's no one with the clear authority to sound such an alarm, and people will always disagree about which advances count as evidence of a conflagration. 'There will be no fire alarm that is not an actual running AGI,' Yudkowsky has written. Even if everyone agrees on the threat, no company or country will want to pause on its own, for fear of being passed by competitors. ... That may require quitting A.I. cold turkey before we feel it's time to stop, rather than getting closer and closer to the edge, tempting fate. But shutting it all down would call for draconian measures—perhaps even steps as extreme as those espoused by Yudkowsky, who recently wrote, in an editorial for Time, that we should 'be willing to destroy a rogue datacenter by airstrike,' even at the risk of sparking 'a full nuclear exchange.'"

- ^ Russell, Stuart; Norvig, Peter (2009). Artificial Intelligence: A Modern Approach. Prentice Hall. ISBN 978-0-13-604259-4.

- ^ Leighton, Jonathan (2011). The Battle for Compassion: Ethics in an Apathetic Universe. Algora. ISBN 978-0-87586-870-7.

- ^ Kurzweil, Ray (2005). The Singularity Is Near. New York City: Viking Penguin. ISBN 978-0-670-03384-3.

- ^ Ford, Paul (11 February 2015). "Our Fear of Artificial Intelligence". MIT Technology Review. Archived from the original on 30 March 2019. Retrieved 9 April 2019.

External links

- Media related to Eliezer Yudkowsky at Wikimedia Commons

- Official website

- Rationality: From AI to Zombies (entire book online)